Oracle SOA Suite 12c introduced a new product called Managed File Transfer (MFT). MFT is a centralized file transfer solution for enterprises that addresses several pain points with file transfers:

- It provides secure access to file transfers and tracks every step of the end-to-end file transmission.

- An easy-to-use UI for designing, monitoring and administering file transfers, which non-technical staff can use as well.

- Extensive reporting with detailed status tracking and options to resubmit failed transfers.

- Built-in support for many pre- and post-processing actions such as compression/decompression and encryption/decryption (PGP etc.), so no custom code is needed for these actions.

- Out-of-the-box support for many technologies, including SOA and OSB, which opens up multiple integration patterns. For example, for a large payload, MFT can simply consume the source file and pass it as a reference to a SOA target for chunked reading and transformation.

- Integration with ESS (Enterprise Scheduler Service), another new product in 12c, which allows flexible schedules for file transfers without custom code (Quartz, Control-M scripts, cron jobs etc.).

The centralized monitoring dashboard in the MFT console is robust and provides granular details of each phase of a file transfer (e.g. if the target system is SOA, it has embedded links to the EM Console for tracing the status there as well). Even so, a typical production environment may need email alerts that report file transfer failures to a support distribution list, since people cannot be expected to monitor the dashboard all the time.

Below are the high-level steps to enable email notifications in MFT 12c.

Step1:

Run the WLST commands below in sequence to enable the event and add a contact for email notification:

o cd ${MW_HOME}/mft/common/bin

o ./wlst.sh

o connect("weblogic","xxxx","t3://hostname:port")

o updateEvent('RUNTIME_ERROR_EVENT', true)

o createContact('Email', 'abc@xyz.com')

o addContactToNotification('RUNTIME_ERROR_EVENT', 'Email', 'abc@xyz.com')

o disconnect()

o exit()
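
When several addresses need to be registered, the commands above can also be kept in a script file and passed to `wlst.sh` instead of being typed interactively. The following is a minimal sketch in plain Python that only renders such a script as text. The function name `render_wlst_script`, the output file name `enable_mft_alerts.py`, and the placeholder credentials and URL are assumptions for illustration; the MFT commands it emits are the same ones listed in Step1.

```python
def render_wlst_script(emails, event="RUNTIME_ERROR_EVENT",
                       user="weblogic", password="xxxx",
                       url="t3://hostname:port"):
    """Return WLST script text that enables `event` and registers each email.

    Hypothetical helper: it only builds text and never talks to a server.
    """
    lines = [
        'connect("%s","%s","%s")' % (user, password, url),
        "updateEvent('%s', true)" % event,
    ]
    for email in emails:
        # One contact plus one notification binding per address, as in Step1.
        lines.append("createContact('Email', '%s')" % email)
        lines.append(
            "addContactToNotification('%s', 'Email', '%s')" % (event, email)
        )
    lines += ["disconnect()", "exit()"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder address taken from the steps above.
    script = render_wlst_script(["abc@xyz.com"])
    with open("enable_mft_alerts.py", "w") as handle:
        handle.write(script)
    print(script)
```

The generated file could then be run with `./wlst.sh enable_mft_alerts.py` from the same directory used in Step1. Storing a real password in a script file is a trade-off worth weighing before using this approach.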

Step2:

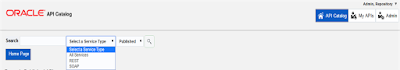

Configure the Email Driver for notifications. At a minimum, set the Outgoing Mail Server and its port, which are mandatory.

You have to restart the MFT managed server after changing these settings.
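
If no email arrives later, it can help to first confirm that the outgoing mail server accepts connections from the MFT host. The following is a small stand-alone Python sketch using the standard `smtplib` module. It is not part of MFT, and the function name `smtp_reachable`, the host `mail.example.com`, and port 25 are placeholders to be replaced with the values entered in the Email Driver.

```python
import smtplib


def smtp_reachable(host, port, timeout=5.0):
    """Return True if an SMTP server at host:port answers a NOOP command."""
    try:
        # Passing a host makes the constructor open the connection.
        server = smtplib.SMTP(host, port, timeout=timeout)
    except (OSError, smtplib.SMTPException):
        return False
    try:
        code, _ = server.noop()
        return code == 250
    except (OSError, smtplib.SMTPException):
        return False
    finally:
        try:
            server.quit()
        except (OSError, smtplib.SMTPException):
            pass  # The connection may already be closed.


if __name__ == "__main__":
    # Placeholder values, assumed for illustration only.
    print(smtp_reachable("mail.example.com", 25))
```

This only checks basic connectivity; it does not verify authentication or that the server will relay mail for the configured sender.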

Step3:

Try replicating a file transfer error, then check for the error in the Monitoring Dashboard and for the error email in Outlook.

NOTE:

If the event listed above is not enabled or no contacts are specified, notification messages are sent to the JMS queue “MFTExceptionQueue”.