As important as it is for an enterprise to move towards Service Oriented Architecture (SOA) to bring the necessary agility to its business, it is equally important to implement a SOA Governance Framework. Ungoverned services lead to redundancy and duplication, non-standards-based development, and slower time to market. SOA Governance controls the service lifecycle from inception through design and build to decommissioning. It helps increase service reuse and provides tools and reports for tracking service usage across the enterprise, thereby giving an accurate measure of ROI.

Oracle's Fusion Middleware 12c stack provides two SOA Governance products:

- Oracle Enterprise Repository (OER)

- Oracle API Catalog (OAC)

OER provides robust design-time and run-time governance support for the service lifecycle, enabling storage and management of extensible metadata for composites, services, business processes, and other IT-related artifacts.

OAC, on the other hand, is a lightweight SOA governance tool that lets you build a catalog of your enterprise's APIs. It provides a simplified metadata model for API assets along with easy search capabilities in the console.

I won't cover each product in depth, as there is already some great content about them online, such as:

In-Depth look of API Catalog 12c

Having evaluated both products, what I intend to do in this blog post is compare the two in the important areas. I hope this gives a high-level overview of the available features and helps you choose the right tool.

User-Role Mapping

OER:

OER provides several roles, and depending on the organization's needs, all of them can be leveraged at various phases of the service lifecycle. The OER Admin screen lets you control the access settings for each role. The key roles are:

- Registrar: the primary role for reviewing and approving assets.

- User: read-only access.

- Project Architect/Advanced Submitter: can edit assets.

- Administrator roles: several roles that perform different levels of admin tasks, allowing granular segregation of duties.

OAC:

OAC has a simplified role structure with just three roles:

- Developer: can search for APIs (SOAP/REST) and use them.

- Curator: reviews APIs, edits them to enrich them with additional metadata, and publishes them.

- Admin: can harvest APIs from the command line and perform other admin tasks.
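To make the contrast concrete, the OAC role list can be read as a small role-to-capability table. The Python sketch below is an illustration only: the `Capability` names and the `can` helper are hypothetical and simply transcribe the three role descriptions; they are not OAC's internal permission model or API.

```python
from enum import Enum, auto


class Capability(Enum):
    """Hypothetical capability names distilled from the OAC role descriptions."""
    SEARCH_API = auto()
    USE_API = auto()
    REVIEW_API = auto()
    EDIT_METADATA = auto()
    PUBLISH_API = auto()
    HARVEST_CLI = auto()
    ADMIN_TASKS = auto()


# Role -> capabilities, transcribed from the role list above (illustrative only).
OAC_ROLES: dict[str, frozenset[Capability]] = {
    "Developer": frozenset({Capability.SEARCH_API, Capability.USE_API}),
    "Curator": frozenset(
        {Capability.REVIEW_API, Capability.EDIT_METADATA, Capability.PUBLISH_API}
    ),
    "Admin": frozenset({Capability.HARVEST_CLI, Capability.ADMIN_TASKS}),
}


def can(role: str, capability: Capability) -> bool:
    """Return True if the role is described as having the capability; unknown roles get nothing."""
    return capability in OAC_ROLES.get(role, frozenset())


print(can("Curator", Capability.PUBLISH_API))    # True
print(can("Developer", Capability.PUBLISH_API))  # False
```

With only three roles, a flat lookup like this is enough; modelling OER's finer-grained administrator roles would need many more entries, which is exactly the granularity difference described above.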

Projects-Departments

OER:

Projects are the primary means of gathering metrics in OER. OER tracks assets produced by projects as well as assets consumed by projects. For a user to access any files associated with assets in OER, the user must be assigned to a project. Both users and projects are assigned to departments, which is convenient from a reporting standpoint: organizations can track the production and consumption of reusable assets by department. In addition to the Default Department and Common Project, OER allows you to create your own projects and departments.
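A minimal Python sketch of how project-level tracking rolls up to departments, assuming each project belongs to exactly one department. `Project`, `AssetEvent`, `reuse_by_department`, and `can_access_files` are hypothetical names for illustration, not OER's API or schema, and the project and asset names in the example are made up.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Project:
    name: str
    department: str  # assumption: each project belongs to exactly one department


@dataclass(frozen=True)
class AssetEvent:
    asset: str
    project: str
    kind: str  # "produced" or "consumed"


def reuse_by_department(
    projects: list[Project], events: list[AssetEvent]
) -> tuple[Counter, Counter]:
    """Roll project-level production/consumption events up to departments."""
    dept_of = {p.name: p.department for p in projects}
    produced: Counter = Counter()
    consumed: Counter = Counter()
    for e in events:
        dept = dept_of[e.project]
        if e.kind == "produced":
            produced[dept] += 1
        elif e.kind == "consumed":
            consumed[dept] += 1
        else:
            raise ValueError(f"unknown event kind: {e.kind!r}")
    return produced, consumed


def can_access_files(user: str, assignments: dict[str, set[str]]) -> bool:
    """A user needs at least one project assignment to access asset files."""
    return bool(assignments.get(user))


# Hypothetical projects and events for illustration.
projects = [
    Project("Common Project", "Default Department"),
    Project("OrderMgmt", "Sales IT"),
    Project("Billing", "Finance IT"),
]
events = [
    AssetEvent("CustomerService", "OrderMgmt", "produced"),
    AssetEvent("CustomerService", "Billing", "consumed"),
    AssetEvent("InvoiceProcess", "Billing", "produced"),
]
produced, consumed = reuse_by_department(projects, events)
print(dict(produced))  # {'Sales IT': 1, 'Finance IT': 1}
print(dict(consumed))  # {'Finance IT': 1}
```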

OAC:

OAC doesn't allow you to create custom projects; all API assets are published and tracked under the default "Common Project". It does, however, allow you to create custom departments.

Asset Data Model

OER:

OER provides a base set of data and the option to import the harvester solution pack, which includes asset types specific to SOA artifacts (BPEL/OSB). Most SOA code artifacts are mapped to one of these asset types when harvested. For example, harvesting an SCA project creates several OER assets, such as Composite, Business Process:BPEL, ComponentType, XSD, XSLT, WSDL, JCA, FaultPolicies, Adapter, Interface, Endpoint, and Service.

There is also an option to create custom asset types if the need arises. For example, we can create document asset types to capture a Requirements Specification, Technical Design Document, etc., and map them to the various stages of the Service Lifecycle (SDLC).

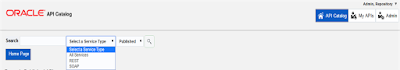

OAC:

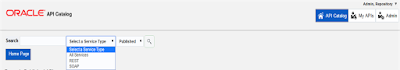

OAC follows a simplified metadata model for API assets. It classifies each API asset as either REST or SOAP, and every harvested code artifact (BPEL or OSB) is categorized as one of these two service types, as shown in the screenshot above.

Also, OAC doesn't provide an option to create any other custom asset type from either the console or the command line.

Asset Approval Workflow

OER:

In each phase of the service lifecycle, assets move through various states of the governance approval workflow.

An asset harvested from the command line or console starts in the Submitted - Pending Review state. Once the Registrar reviews, accepts, and approves it, the asset moves to the Submitted - Under Review state. As further approvals happen and the Registrar finally registers it, the asset moves to the Registered state. Only Registered assets are available for consumption by Users/Developers.
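The OER workflow above behaves like a small state machine. The following Python sketch models it under simplifying assumptions: only the Registrar moves an asset between states, the intermediate approvals are folded into the final "register" step, and the class and action names are hypothetical rather than anything from OER's API.

```python
from enum import Enum


class OerState(Enum):
    PENDING_REVIEW = "Submitted - Pending Review"
    UNDER_REVIEW = "Submitted - Under Review"
    REGISTERED = "Registered"


# (current state, action) -> next state. Assumption: intermediate approvals
# are folded into the final "register" step for brevity.
TRANSITIONS = {
    (OerState.PENDING_REVIEW, "accept"): OerState.UNDER_REVIEW,
    (OerState.UNDER_REVIEW, "register"): OerState.REGISTERED,
}


class GovernedAsset:
    def __init__(self, name: str) -> None:
        self.name = name
        # Assets harvested from the console or command line start here.
        self.state = OerState.PENDING_REVIEW

    def apply(self, action: str, role: str) -> None:
        """Move the asset to its next state; only the Registrar may do so."""
        if role != "Registrar":
            raise PermissionError(f"{role} cannot {action} assets")
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {action} an asset in state {self.state.value!r}")
        self.state = TRANSITIONS[key]

    @property
    def consumable(self) -> bool:
        """Only Registered assets are available to Users/Developers."""
        return self.state is OerState.REGISTERED


asset = GovernedAsset("CustomerService")
asset.apply("accept", "Registrar")
asset.apply("register", "Registrar")
print(asset.state.value, asset.consumable)  # Registered True
```

Keeping the transitions in a table rather than in if/else branches makes it easy to see which moves are legal and to reject anything else with a clear error.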

OAC:

OAC follows a simplified approval workflow for API assets. Every API asset harvested from the command line starts in the Draft state. The Curator logs in to OAC, reviews the asset, optionally edits it with additional metadata or documentation, and finally changes the API status to Published. Only Published APIs are available for consumption by Developers.
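By comparison, OAC's workflow has only two states. This hypothetical Python sketch (the `ApiAsset` class and `developer_view` helper are illustrative names, not an OAC API) shows a harvested API starting in Draft, a Curator enriching and publishing it, and Developers seeing only Published APIs. It also encodes the REST/SOAP-only classification from the asset data model.

```python
from dataclasses import dataclass
from enum import Enum


class ApiStatus(Enum):
    DRAFT = "Draft"
    PUBLISHED = "Published"


@dataclass
class ApiAsset:
    name: str
    service_type: str  # OAC classifies every API asset as "REST" or "SOAP"
    status: ApiStatus = ApiStatus.DRAFT  # command-line harvests land in Draft
    documentation: str = ""

    def __post_init__(self) -> None:
        if self.service_type not in ("REST", "SOAP"):
            raise ValueError(f"unsupported service type: {self.service_type!r}")

    def curate(self, role: str, documentation: str) -> None:
        """Curator enriches the API with extra metadata or documentation."""
        if role != "Curator":
            raise PermissionError("only a Curator can edit an API")
        self.documentation = documentation

    def publish(self, role: str) -> None:
        """Curator changes the API status from Draft to Published."""
        if role != "Curator":
            raise PermissionError("only a Curator can publish an API")
        self.status = ApiStatus.PUBLISHED


def developer_view(catalog: list[ApiAsset]) -> list[str]:
    """Developers only see Published APIs."""
    return [a.name for a in catalog if a.status is ApiStatus.PUBLISHED]


catalog = [ApiAsset("OrderService", "SOAP"), ApiAsset("CustomerAPI", "REST")]
catalog[1].curate("Curator", "Customer lookup API")
catalog[1].publish("Curator")
print(developer_view(catalog))  # ['CustomerAPI']
```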

Harvesting Assets

OER:

OER allows asset submission/harvesting from both the OER console and the command-line tool.

From the command line, we need to edit the HarvesterSettings.xml file so that the OER connection credentials and SOA/OSB project details are specified correctly, and then run either harvester.sh for SOA artifacts or osb-harvester.sh for OSB artifacts.
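Before running the harvester, a quick pre-flight check can catch a forgotten credential. The Python sketch below is schema-agnostic: the hypothetical `empty_settings` helper walks any XML document and lists leaf elements with no text, and the hypothetical `pick_script` chooses between the two scripts named above. The element names and host in the sample are placeholders, not the real HarvesterSettings.xml schema.

```python
import xml.etree.ElementTree as ET

# Script names as described in the text; everything else here is illustrative.
HARVESTER_SCRIPTS = {"SOA": "harvester.sh", "OSB": "osb-harvester.sh"}


def pick_script(artifact_kind: str) -> str:
    """Return the harvester script for SOA or OSB artifacts."""
    try:
        return HARVESTER_SCRIPTS[artifact_kind.upper()]
    except KeyError:
        raise ValueError(f"unsupported artifact kind: {artifact_kind!r}") from None


def empty_settings(xml_text: str) -> list[str]:
    """List the paths of leaf elements that have no text (e.g. a blank password).

    Schema-agnostic: no particular element names are assumed.
    """
    root = ET.fromstring(xml_text)
    missing: list[str] = []

    def walk(elem: ET.Element, path: str) -> None:
        children = list(elem)
        if not children:
            if not (elem.text or "").strip():
                missing.append(path)
            return
        for child in children:
            walk(child, f"{path}/{child.tag}")

    walk(root, root.tag)
    return missing


# Placeholder element names and host, NOT the real HarvesterSettings.xml schema.
sample = """<settings>
  <repository>
    <uri>http://oer-host:7101/oer</uri>
    <user>admin</user>
    <password></password>
  </repository>
</settings>"""

print(pick_script("osb"))      # osb-harvester.sh
print(empty_settings(sample))  # ['settings/repository/password']
```

Against a real installation you would read the actual HarvesterSettings.xml into a string and pass it in; some settings may legitimately be empty, so treat the output as a checklist rather than an error.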

OAC:

OAC doesn't allow any harvesting from its console. You can, however, use the same harvester scripts as OER to harvest API assets into OAC from the command line.

NOTE: Both OAC and OER use the same installer and command line tools.

System Settings

OER:

The OER Admin screen provides a rich set of administration tools covering many areas, such as Reporting, Notifications, SFID for usage tracking, LDAP/SSO integration, and Policy Management.

OAC:

The OAC Admin screen has a comparatively limited set of administration functions, with no support for notification emails or reporting. It does, however, support LDAP/Active Directory integration.

Usage Tracking & Reporting

OER:

You can use the OER console to Use-Download an asset; the console prompts you to select a project, and the asset's usage is tracked against that project.

The other way to consume an asset is from JDeveloper/IDE after downloading the OER plugin. Once SFID is enabled, OER detects usage automatically.

OER provides out-of-the-box reports that measure the overall productivity of project teams and show the quality, status, and value of the asset portfolio. These reports require a separate installation of, and integration with, Oracle BI Publisher 11g, since they are rendered in BIP.

OAC:

OAC also provides similar options to track usage of API assets from both the console and JDeveloper. From the console, the Developer clicks the Add to MyAPI link on a Published API asset to begin tracking its usage.

However, OAC has no reporting feature.